Hello there! Every Monday, we share some AI news that caught our attention (and maybe should catch yours too!). This week, we look at an evaluation of ChatGPT's medical capabilities, the launch of a new open LLM from Mistral AI, and a new analysis of open-model trends suggesting that open generative AI is becoming available at an accelerating pace.

The Stanford Institute for Human-Centered AI tested the medical capabilities of generative AI (and the results are not good…)

The Stanford Institute for Human-Centered AI, which works on advancing AI research, education, policy, and practice to improve the human condition, shows in an article titled "How Well Do Large Language Models Support Clinician Information Needs" that GPT-4 is not robust enough for use as a medical co-pilot. Using a set of 64 questions drawn from a repository of ~150 clinical questions created as part of the Green Button project, the authors prompted ChatGPT and measured the quality of its answers. They found that the answers are:

- Non-deterministic: Responses to the same question showed low similarity and high variability, with Jaccard and cosine similarity coefficients of only 0.29 and 0.45, respectively.

- Inaccurate: Only 41% of GPT-4 responses agreed with the known answer to the medical question, according to a consensus of 12 physicians.

- Potentially harmful: 7% of answers were deemed potentially harmful by the consensus of physicians.
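
For readers curious about how similarity scores like these can be computed, here is a minimal Python sketch. It assumes plain whitespace tokenization and bag-of-words counts. We do not know how the Stanford team tokenized or embedded the responses, so the function names, the preprocessing, and the two sample responses are illustrative assumptions, not the study's method.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (an assumed, very simple tokenizer)."""
    return text.lower().split()


def jaccard_similarity(a, b):
    """Share of distinct words that two texts have in common."""
    set_a, set_b = set(tokenize(a)), set(tokenize(b))
    if not set_a and not set_b:
        return 1.0  # two empty texts are treated as identical
    return len(set_a & set_b) / len(set_a | set_b)


def cosine_similarity(a, b):
    """Cosine of the angle between the word-count vectors of two texts."""
    counts_a, counts_b = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(counts_a[word] * counts_b[word] for word in counts_a)
    norm_a = math.sqrt(sum(c * c for c in counts_a.values()))
    norm_b = math.sqrt(sum(c * c for c in counts_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # similarity with an empty text is defined as 0 here
    return dot / (norm_a * norm_b)


# Two made-up responses to the same question (hypothetical, for illustration).
response_1 = "start anticoagulation and monitor renal function"
response_2 = "monitor renal function and consider anticoagulation"

print(round(jaccard_similarity(response_1, response_2), 2))
print(round(cosine_similarity(response_1, response_2), 2))
```

Both scores range from 0 (no overlap) to 1 (identical wording), so values of 0.29 and 0.45 mean that repeated answers to the same question shared relatively little of their wording.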

You can read the article here.

Mixtral 8x7B released on December 11!

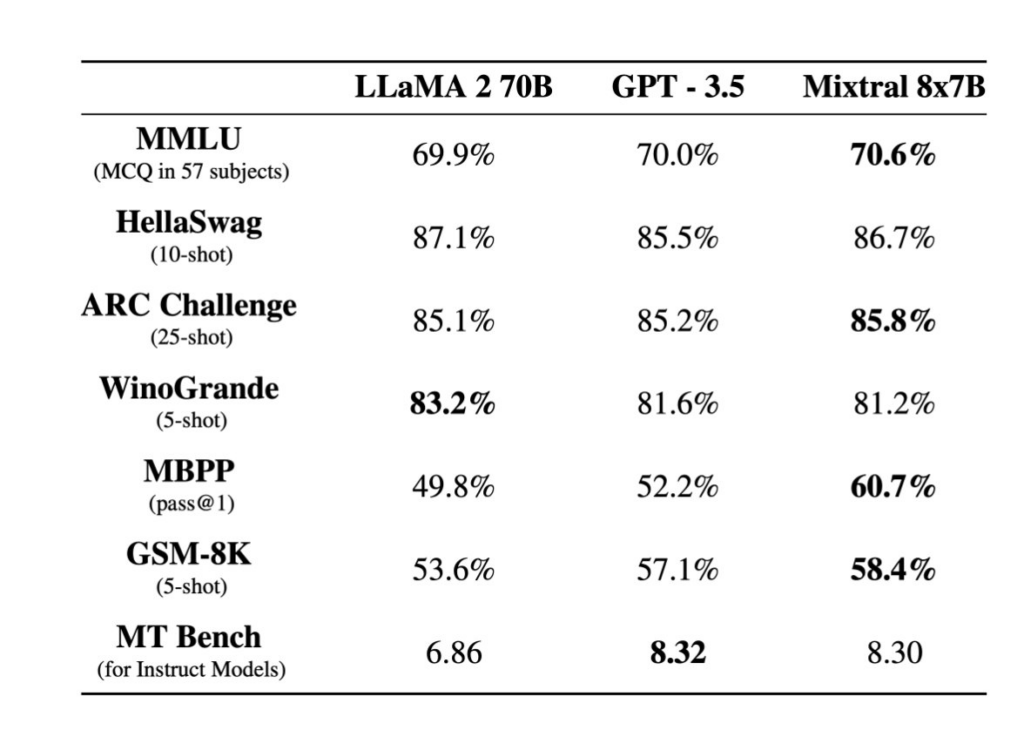

The Mistral AI team unveiled its latest LLM, Mixtral, at NeurIPS. Mixtral 8x7B is an open-weight mixture-of-experts model. It matches or outperforms Llama 2 70B and GPT-3.5 on most benchmarks, and has the inference speed of a 12B dense model. It supports a context length of 32k tokens. Mixtral has an architecture similar to that of Mistral 7B, with the difference that each layer is composed of 8 feedforward blocks (the experts). For every token, at each layer, a router network selects two experts to process the current state and combines their outputs. Mixtral was trained on a large amount of multilingual data and significantly outperforms Llama 2 70B on French, German, Spanish, and Italian benchmarks.
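
To make the routing idea more concrete, here is a toy sketch in pure Python of a layer where a router picks two experts out of eight for each token and mixes their outputs. Everything in it is an assumption for illustration: the experts are trivial scaling functions instead of feedforward networks, the router is a single weight matrix, and the gate weights come from a softmax over the two selected router scores. It is not Mistral AI's implementation.

```python
import math


def softmax(values):
    """Numerically stable softmax over a short list of scores."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def top2_moe_layer(token, router_weights, experts):
    """Route one token vector through its two best-scoring experts.

    token:          list of floats (the current hidden state)
    router_weights: one row of weights per expert (hypothetical router)
    experts:        list of callables mapping a vector to a vector
    """
    # One router score per expert: dot product of its row with the token.
    scores = [sum(w * x for w, x in zip(row, token)) for row in router_weights]

    # Keep only the two experts with the highest scores.
    selected = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:2]

    # Normalize the two selected scores into mixing weights (assumed gating).
    gates = softmax([scores[i] for i in selected])

    # Weighted sum of the two expert outputs; the other six are never run.
    output = [0.0] * len(token)
    for gate, index in zip(gates, selected):
        expert_output = experts[index](token)
        output = [o + gate * e for o, e in zip(output, expert_output)]
    return output, selected


# Toy setup: 8 experts, each scaling the token by a different constant.
experts = [lambda v, s=scale: [s * x for x in v] for scale in range(1, 9)]
router_weights = [[float(i), 0.0] for i in range(8)]

output, selected = top2_moe_layer([1.0, 0.0], router_weights, experts)
print(selected, output)
```

Because only two of the eight experts run for each token, the compute per token stays far below what the total parameter count would suggest, which is the intuition behind the "inference speed of a 12B dense model" claim.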

Mistral AI is now a major player in the field of generative models. The French artificial intelligence start-up, founded in May 2023 by industry heavyweights, announced on Sunday, December 10, that it had raised €385 million, becoming one of Europe’s two AI champions. The venture, founded by three French AI experts trained at École Polytechnique and ENS, is now valued at some $2 billion. Mistral’s ambition is to become the leading supporter of the open generative AI community and to bring open models to state-of-the-art performance.

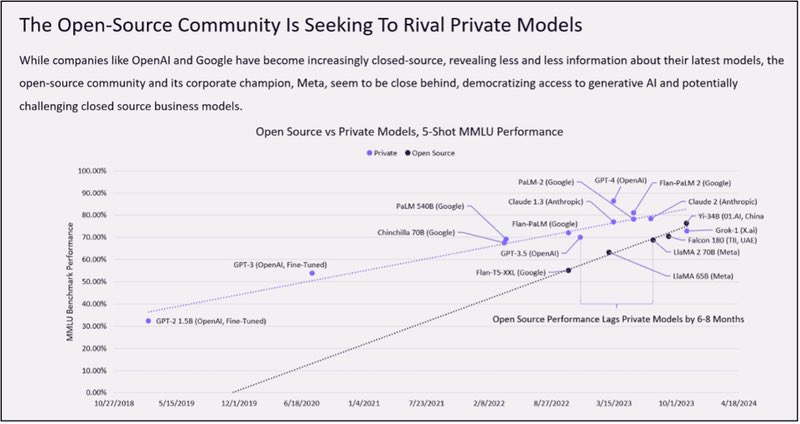

The open-source AI community is gaining traction and seeks to rival private models

Open-source generative artificial intelligence (AI) models are gaining ground, challenging the dominance of centralized, cloud-backed models like ChatGPT. Leading players in the generative AI field, such as Google and OpenAI, have traditionally followed a centralized approach, restricting public access to their data sources and trained models. Research conducted by Cathie Wood’s ARK Invest suggests a potential shift, with open-source AI models outperforming their centralized counterparts by 2024.