This week we have big news: a new lawsuit from the New York Times and the public launch of the Bing image generator.

The New York Times sues OpenAI and Microsoft over copyright infringement!

The New York Times sued OpenAI and Microsoft for copyright infringement on Wednesday, opening a new front in the increasingly intense legal battle over the unauthorized use of published work to train artificial intelligence technologies (see here).

“As outlined in the lawsuit, the Times alleges OpenAI and Microsoft’s large language models (LLMs), which power ChatGPT and Copilot, ‘can generate output that recites Times content verbatim, closely summarizes it, and mimics its expressive style.’ This ‘undermine[s] and damage[s]’ the Times’ relationship with readers, the outlet alleges, while also depriving it of ‘subscription, licensing, advertising, and affiliate revenue.’” (see The Verge)

This is big news to close out 2023, as it will create legal uncertainty around the most prominent LLMs (the OpenAI API is used in many start-up apps, and Bing and Office 365 Copilot are Microsoft’s star products for 2024).

As explained in The Verge, The New York Times is one of many news outlets that have blocked OpenAI’s web crawler in recent months, preventing the AI company from continuing to scrape content from its website and using the data to train AI models. The BBC, CNN, and Reuters have moved to block OpenAI’s web crawler as well. […] Axel Springer, which owns Politico and Business Insider, struck a deal with OpenAI earlier this month that allows ChatGPT to pull information directly from both sources, while the Associated Press is allowing OpenAI to train its models on its news stories for the next two years.

Bing Image Creator is here

Image Creator helps you generate AI images with DALL-E right from the sidebar in Microsoft Edge. Given a text prompt, our AI will generate a set of images matching that prompt. It’s free, there’s no waitlist, and you don’t even need to use Edge to access it. You can use it here. You can read more about it in this article.

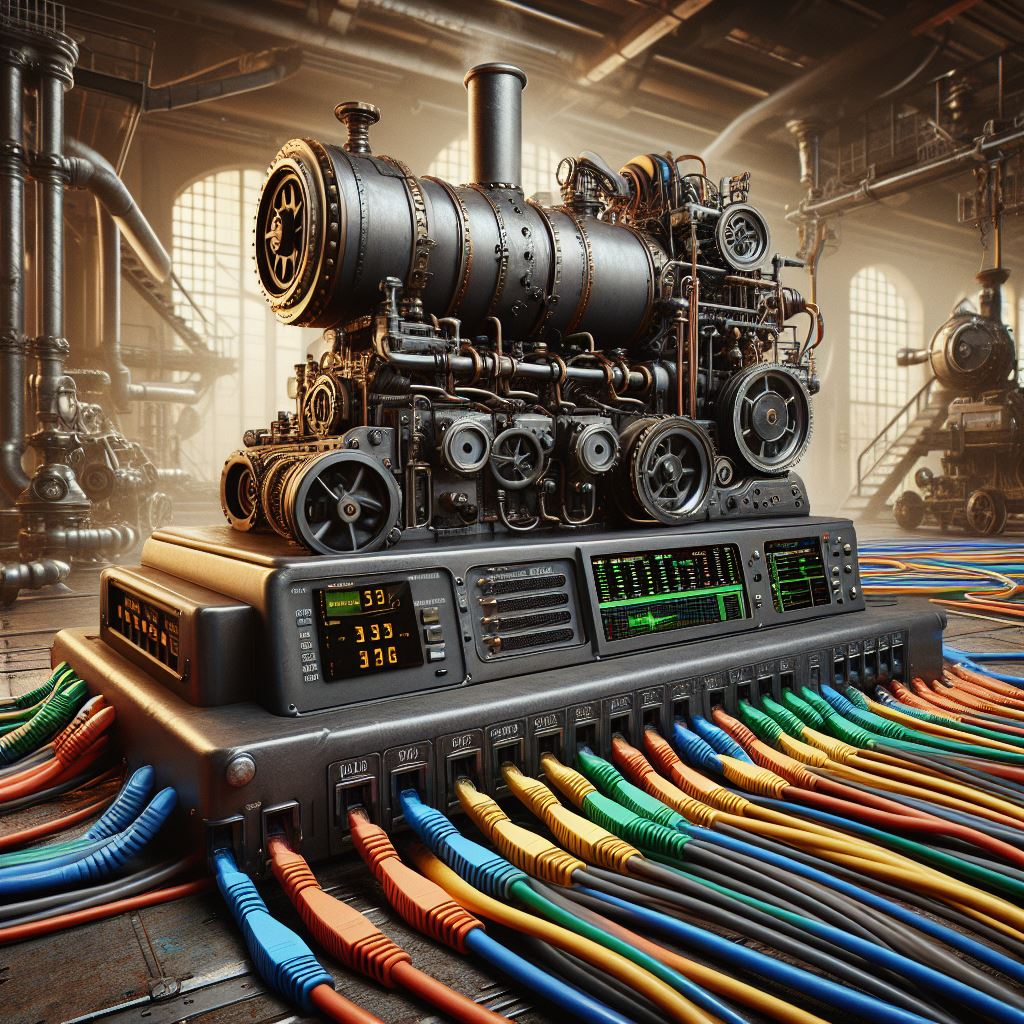

We compared Stable Diffusion and the Bing image generator using several prompts (the prompt below is one example). In this example, the Stable Diffusion image is finer and more detailed, but the Bing image actually drew what was in the prompt (including the RJ45 cables). Visually, both are good and leave plenty of room for creativity. Using multiple generators to benefit from their subtle variations will probably become a common approach in the future.

A machine intended to measure a steam engine. The scene is in a steam punk world with many pipes in the background. the machine is connected to a computer network using many colored rj45 links